If data is the new oil, then AI is the new electricity!

More data is now created every hour than was created in an entire year just two decades ago, and global data is expected to double every two years, yet only 1% of it is actually captured, stored or used worldwide. This is about to change with the introduction of large language models (Artificial Intelligence). While humanity will undeniably benefit from them, it will equally have to face the challenges they bring, from the impact on corporate governance to containing the emissions they produce.

More data is created per hour today than in an entire year just two decades ago and global data is expected to double every 2 years.

For example, every minute of the day there are 5.9 million Google searches and 347,200 new tweets, and Amazon shoppers spend $443,000.

In most computer systems, the basic unit of data is called a “byte”, and it can represent a single character such as a letter or a digit. We are now entering the age of the yottabyte: the prefix “yotta” stands for a factor of 10^24 (1,000,000,000,000,000,000,000,000) and was the largest in the metric system until the prefixes ronna and quetta were added in 2022. A yottabyte is equivalent to the information contained in 250 trillion DVDs.
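The 250-trillion figure can be checked with a single division. The sketch below assumes decimal units (1 GB = 10^9 bytes) and compares a 4 GB disc with the 4.7 GB nominal capacity of a single-layer DVD; the article does not state which capacity it used, and the function name is illustrative.

```python
# Worked check of the yottabyte-to-DVD comparison.
# Assumption: capacities are in decimal gigabytes (1 GB = 10**9 bytes);
# the article does not say which DVD capacity it assumed.

YOTTABYTE = 10**24  # bytes, using the SI (decimal) prefix "yotta"

def dvds_needed(total_bytes: int, dvd_bytes: int) -> float:
    """Return how many discs of size dvd_bytes are needed to hold total_bytes."""
    return total_bytes / dvd_bytes

for label, capacity in [("4.0 GB", 4 * 10**9), ("4.7 GB", 47 * 10**8)]:
    trillions = dvds_needed(YOTTABYTE, capacity) / 10**12
    print(f"{label} discs: about {trillions:,.0f} trillion")
```

With exactly 4 GB per disc the result is 250 trillion; with the full 4.7 GB it falls to roughly 213 trillion, so the article's figure appears to assume about 4 GB per DVD.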

Generative AI Models

Despite this enormous production of data, only 1% is actually captured, stored and used on a global basis. This is about to change with large language models such as ChatGPT, which finally enable us to capitalize fully on the data revolution.

Until now, AI could read and write but could not actually understand content. Generative AI tools such as ChatGPT and GitHub Copilot, along with advanced AI models such as Stable Diffusion, DALL-E 2 and GPT-3, have changed this, pushing technology into areas once reserved for humans and enabling machines to understand natural language and produce human-like dialogue and content.

With generative AI, computers are now able to exhibit creativity and produce original content in response to queries, drawing from their knowledge and interactions with users.

These generative AI systems are built on large-scale deep learning models that have been trained on massive, unstructured data sets and can be adapted to a wide range of use cases with minimal fine-tuning.

AI can be classified into four levels:

- Level 1 is basic control programming, labelled as AI for marketing purposes in household appliances.

- Level 2 is classic AI, as seen in chess games, cleaning robots and question-answering systems.

- Level 3 is AI that incorporates machine learning, as used in search engines and in decision-making based on Big Data.

- Level 4 is AI with independent programmed generation, in which computers can learn the feature values used for machine learning, as in deep learning and leap learning. It uses stacked neural networks to extract high-dimensional feature values from data.

Large language model-based chatbots such as ChatGPT democratize data, making it accessible to everyone without the need for training or experience. As a result, their mass adoption is unprecedented: it took ChatGPT just 5 days to reach 1 million users and 3 months to reach 1 billion cumulative visits, with an adoption rate 3x that of TikTok and 10x that of Instagram.

The technology is developing exponentially. Over the last decade, the computing power used to train AI models doubled every 3 months, outpacing Moore’s Law* by a factor of 6. In the past 4 years, the number of parameters in large language models grew 1,900-fold, and within a decade AI models could be 1 million times more powerful than ChatGPT is today.
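The “6x” comparison is easiest to read as a ratio of doubling rates. A minimal sketch, assuming Moore’s Law is taken as the often-quoted 18-month doubling period (the text does not say which period it uses): doubling every 3 months means 4 doublings a year against two-thirds of a doubling, a ratio of 6. The function names are illustrative.

```python
# Compare doubling rates: "doubles every 3 months" vs. Moore's Law.
# Assumption: the "6x" compares doubling *rates* and takes Moore's Law
# as the often-quoted 18-month doubling period.

def doublings_per_year(period_months: float) -> float:
    """Number of doublings completed in one year for a given doubling period."""
    return 12 / period_months

def growth_over(years: float, period_months: float) -> float:
    """Total growth factor after `years` for a given doubling period."""
    return 2 ** (years * doublings_per_year(period_months))

ai_rate = doublings_per_year(3)      # 4.0 doublings per year
moore_rate = doublings_per_year(18)  # about 0.667 doublings per year
print(f"rate ratio (18-month Moore): {ai_rate / moore_rate:.1f}x")
print(f"rate ratio (24-month Moore): {ai_rate / doublings_per_year(24):.1f}x")

# The gap in *cumulative* growth over a decade is far larger than the rate ratio.
print(f"AI compute over 10 years: {growth_over(10, 3):.2e}x")
print(f"Moore's Law over 10 years: {growth_over(10, 18):.0f}x")
```

Using the two-year period from Moore’s 1975 revision gives 8x rather than 6x, and cumulative growth diverges much more: over ten years, doubling every 3 months compounds to 2^40 (roughly a trillionfold), versus about a hundredfold under an 18-month doubling.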

The Impact of AI on the Global Economy

With total private investment in AI doubling between 2020 and 2021, we are now entering the fifth industrial revolution, with the global AI market expected to grow to $900bn by 2026.

Every sector will be affected, but the immediate beneficiaries include tech hardware (semiconductors, GPUs, data centers), software (cloud, analytics) and cybersecurity (e.g. phishing protection).

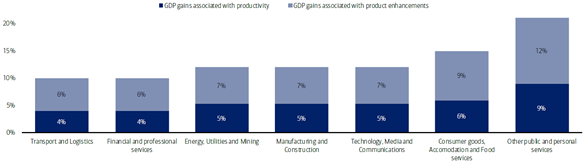

The services industry (health, education, public services and recreation) is expected to gain the most (GDP +21% by 2030). This is mainly due to the healthcare sector, which should see more personalized, higher-quality medical advice. For example, healthcare professionals could improve the patient experience by using virtual assistants and camera-based healthcare apps to diagnose medical conditions. Conversely, transport and logistics, financial services and professional services are estimated to see smaller GDP gains of c.10%.

Overall, generative AI systems such as ChatGPT could boost the world economy by up to $15.7tn by 2030, and AI could double annual global economic growth rates by 2035.

AI is likely to drive this growth in three ways:

i) by sharply increasing labor productivity (by up to 40%) through automation;

ii) by solving problems and learning on its own;

iii) by expanding innovation across the broader economy.

We carried out an experiment: we asked ChatGPT and DALL-E 2 some questions.

Weighing positive and negative impacts, and looking at ESG

Moore’s Law won’t catch up with AI and data. The total amount of data we are creating is doubling every 2-3 years (other estimates put its growth at a c.50-60% CAGR), which is faster than the increases in CPU processing power. As we enter the era of complex data, the problem could intensify further. Within a few years, CPU processing power will need to keep pace with the growth in data transmission and, according to current projections, it will not. We could reach the limit of Moore’s Law this decade.
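A doubling period T (in years) and a compound annual growth rate are linked by CAGR = 2^(1/T) - 1. The minimal sketch below applies this formula in both directions to the growth figures in the paragraph above: doubling every 2 years corresponds to a CAGR of about 41% and every 3 years to about 26%, while a 50-60% CAGR implies doubling roughly every 1.5-1.7 years. The function names are illustrative.

```python
import math

# Convert between a doubling period T (years) and a compound annual growth rate.
# CAGR = 2 ** (1 / T) - 1, and conversely T = ln(2) / ln(1 + CAGR).

def cagr_from_doubling(years: float) -> float:
    """Annual growth rate implied by doubling every `years` years."""
    return 2 ** (1 / years) - 1

def doubling_from_cagr(rate: float) -> float:
    """Doubling period in years implied by a constant annual growth `rate`."""
    return math.log(2) / math.log(1 + rate)

for t in (2, 3):
    print(f"doubling every {t} years -> CAGR {cagr_from_doubling(t):.1%}")
for g in (0.50, 0.60):
    print(f"CAGR {g:.0%} -> doubling every {doubling_from_cagr(g):.2f} years")
```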

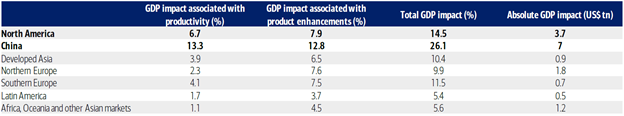

North America and China could see the biggest economic gains from AI in percentage terms. According to analyst estimates, AI is expected to boost GDP by 26.1% in China and 14.5% in North America by 2030; together, these two regions account for c.70% of the global impact. In North America, this reflects advanced technological and consumer readiness for AI, which allows AI to lift productivity faster and, overall, to have a larger effect by 2030. In China, the GDP effects of productivity gains and product enhancements are expected to be higher than in other regions.

The Environmental Impact of Generative AI: large language models (LLMs), with their ever-increasing numbers of parameters, require a significant amount of energy and computing power to train. Training a single large AI model can generate 57x more CO2 emissions than an average person does in a year, and it would take 288 years for a single NVIDIA V100 GPU to train GPT-3.
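The 288-year figure can be converted into total GPU-hours and spread across many GPUs, which is why models of this size are trained on large clusters. This is a hypothetical sketch: it assumes perfect linear scaling, which real training runs do not achieve because of communication and other overheads, and the cluster sizes are illustrative, not those actually used for GPT-3.

```python
# Illustrative arithmetic for "288 years on a single V100".
# Hypothetical assumption: perfect linear scaling across GPUs, which real
# training runs do not achieve (communication and other overheads).

HOURS_PER_YEAR = 365.25 * 24
SINGLE_GPU_YEARS = 288  # figure quoted in the text

def ideal_wall_clock_days(gpu_years: float, num_gpus: int) -> float:
    """Days of wall-clock time if the work were split perfectly across num_gpus."""
    return gpu_years * 365.25 / num_gpus

print(f"total work: {SINGLE_GPU_YEARS * HOURS_PER_YEAR:,.0f} GPU-hours")
for n in (1, 1024, 4096):  # illustrative cluster sizes only
    print(f"{n:>5} GPUs: {ideal_wall_clock_days(SINGLE_GPU_YEARS, n):,.1f} days")
```

Under this idealized assumption the total GPU-hours stay the same however many GPUs share the work; only the wall-clock time shrinks, so, roughly speaking, spreading the job does not by itself reduce the energy consumed.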

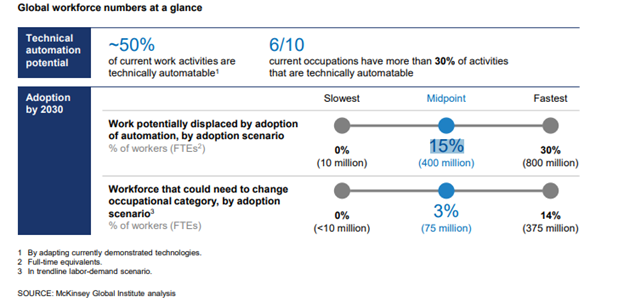

The Social Impact of Generative AI: according to a McKinsey study, AI could displace 15% of the global workforce (about 400 million people) between 2016 and 2030. In a scenario of rapid AI adoption, this share could rise to as much as 30%. Interestingly, there has been a report** of a Chinese video-game company making a bot its CEO. On the other hand, generative AI could also drive upskilling of the workforce.

Finally, generative AI brings corporate governance challenges, including those linked to copyright and the sourcing of content, with the potential for fines or litigation.

* Moore’s Law isn’t really a law in the legal sense, or even a proven theory in the scientific sense (such as E = mc²). Rather, it was an observation made by Gordon Moore in 1965 while he was working at Fairchild Semiconductor: the number of transistors on a microchip (as they were called in 1965) doubled about every year. Moore went on to co-found Intel Corporation, and his observation became the driving force behind the semiconductor technology revolution at Intel and elsewhere.

** https://www.businessinsider.com/video-game-company-made-bot-its-ceo-stock-climbed-2023-3?r=US&IR=T

Sources:

Bank of America, Goldman Sachs Research, College of Information and Computer Sciences at the University of Massachusetts Amherst, KnowHow, Hewlett Packard, International Data Corporation, http://ymatsuo.com/, OpenAI, Eurizon Capital (internal analysis), McKinsey & Co.

Disclaimer:

Nothing in this document is intended as investment research or as a marketing communication, nor as a recommendation or suggestion, express or implied, with respect to an investment strategy concerning the financial instruments managed or issued by Eurizon Capital SGR S.p.A. Nor is this document a solicitation or an offer, or investment, legal, tax or other advice.

The opinions, forecasts or estimates contained herein are made with reference only to the date of preparation, and there can be no assurance that results or any future events will be consistent with the opinions, forecasts or estimates contained herein. The information provided and opinions contained are based on sources believed to be reliable and in good faith. However, no representation or warranty, express or implied, is made by Eurizon Capital SGR S.p.A. as to the accuracy, completeness or fairness of the information provided.

Any information contained in the present document may, after the date of its preparation, be subject to modification or updating.